DAQ Computing Center of the BM@N experiment

OS: AlmaLinux 9

Exp. Software: CVMFS, /cvmfs/ct60068-wordpress-0to18.tw1.ru/

NCX EOS Storage: /eos/lpp/nica/bmn/

MICC EOS Storage: /eos/lit/nica/bmn/

SLURM batch system: 1,128 cores

Telegram Group

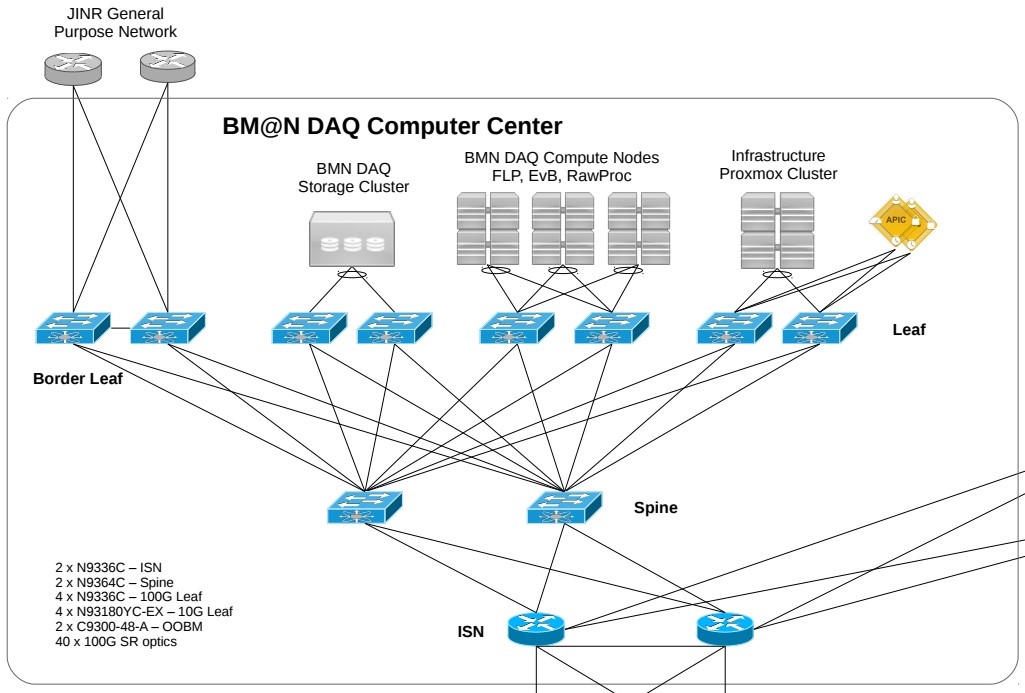

The online DAQ data center of the BM@N experiment has several primary and secondary functions. Its primary function is to receive data from the Data Acquisition System. An equally important function is to collect information for monitoring and diagnostics of the main units and detectors of the BM@N experiment. In addition, between Nuclotron sessions the DAQ computing nodes are partially idle and can be used for offline BM@N data processing.

To access the BM@N DAQ Computing Center, you need a JINR SSO account, obtained as described here. Then request a CICC (JINR Central Computing Complex) account from the administrators here. Once your JINR CICC account is registered, you can use it to log in to the interactive machine:

ssh [username]@ddc.jinr.ru

Installation of the BmnRoot software is described here, in the section “Installing the BmnRoot framework”. The packages required by BmnRoot, FairSoft and FairRoot, are already available on the CVMFS file system. Export the following paths once before compiling BmnRoot (before running SetEnv.sh):

export SIMPATH=/cvmfs/ct60068-wordpress-0to18.tw1.ru/fairsoft/jan24/x86_64-alma9/

export FAIRROOTPATH=/cvmfs/ct60068-wordpress-0to18.tw1.ru/fairroot/v18.8.1/x86_64-alma9/

The storage system of the DAQ center includes the following user disk spaces:

- User home directory: /home/[username], which should not be used for parallel processing.

- Ceph space /ceph/bmn/work/[username] as a faster storage system for temporary results.

- EOS space of the NCX cluster mounted at /eos/lpp/nica/bmn/users/[username].

- EOS space of the MICC (LIT) cluster mounted at /eos/lit/nica/bmn/users/[username].
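The four locations above can be collected in one place so that scripts do not hard-code them. The following is only a minimal sketch: the function name `bmn_user_paths` and the `key=value` output format are hypothetical, and only the directory layout itself comes from the list above.

```shell
# Hypothetical helper: print the per-user storage paths listed above.
# $1: user name (defaults to the current user).
bmn_user_paths() {
    bmn_user="${1:-$(id -un)}"
    echo "home=/home/$bmn_user"                      # not for parallel processing
    echo "ceph=/ceph/bmn/work/$bmn_user"             # faster space for temporary results
    echo "eos_ncx=/eos/lpp/nica/bmn/users/$bmn_user"
    echo "eos_micc=/eos/lit/nica/bmn/users/$bmn_user"
}

# Show the paths for the current user.
bmn_user_paths
```

A job script could, for example, read the `ceph=` line to choose its scratch directory instead of writing temporary files to the home directory.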

The BM@N experimental and simulated data are stored on the EOS distributed storage system (EOS documentation): on the NCX cluster, mounted at /eos/lpp/nica/bmn/, and on the central MICC EOS storage, mounted at /eos/lit/nica/bmn/. Besides using the mounted spaces directly, you can work with EOS data from any remote location via the XRootD and EOS protocols, e.g. with the xrdcp and eos cp commands to copy EOS files, or with the root://ncm.jinr.ru/ (for NCX EOS) and root://eos.jinr.ru/ (for MICC EOS) prefixes to open remote EOS files directly in the ROOT environment (like root://ncm.jinr.ru//eos/nica/bmn/exp/…).
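As an illustration of the remote access described above, the sketch below builds an XRootD URL from one of the two prefixes and prints the corresponding xrdcp command without running it. The helper name `eos_url`, the storage labels `ncx` and `micc`, and the file name `example.root` are hypothetical. The remote path is passed in unchanged, because the example in the text uses /eos/nica/bmn/… rather than the local mount path, so no mapping between the two is assumed here.

```shell
# Sketch: build an XRootD URL for a file on NCX or MICC EOS.
# $1: storage label ("ncx" or "micc"), $2: absolute EOS path on that server.
eos_url() {
    case "$1" in
        ncx)  printf 'root://ncm.jinr.ru/%s\n' "$2" ;;
        micc) printf 'root://eos.jinr.ru/%s\n' "$2" ;;
        *)    echo "unknown storage: $1" >&2; return 1 ;;
    esac
}

# Hypothetical file name, used only for illustration.
remote=$(eos_url ncx /eos/nica/bmn/exp/example.root)

# Print the copy command instead of executing it.
echo "xrdcp $remote ./example.root"
```

The same URL string can be passed to ROOT, for instance to `TFile::Open`, to read the file remotely without copying it first.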

To run your tasks on the DAQ Computing Center, you can use the SLURM batch system. The Center provides a common queue named batch with 1,128 Intel Xeon processor cores. If you know how to work with SLURM (SLURM docs), you can use the sbatch command on the cluster to distribute data processing. A simple example of such a user job for SLURM can be found here.

NICA-Scheduler has been developed to simplify running user tasks without knowledge of SLURM. You can learn how to use NICA-Scheduler here.
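For orientation, a job submission might look roughly like the sketch below. It is not the example referred to above: only the partition name batch is taken from the text, while the job name, time limit, file names and the payload command are placeholders to be adapted. The script is submitted only if sbatch is found on the machine.

```shell
# Write a minimal job script. The partition name "batch" comes from the text;
# the job name, time limit, log file name and payload are placeholders.
cat > bmn_job.sh <<'EOF'
#!/bin/bash
#SBATCH --job-name=bmn_example
#SBATCH --partition=batch
#SBATCH --ntasks=1
#SBATCH --time=01:00:00
#SBATCH --output=bmn_example_%j.log

# Placeholder payload: replace with the actual processing command.
echo "Running on $(hostname)"
EOF

# Submit only where SLURM is available; otherwise report what would be run.
if command -v sbatch >/dev/null 2>&1; then
    sbatch bmn_job.sh
else
    echo "sbatch not found; would run: sbatch bmn_job.sh"
fi
```

In the `--output` pattern, `%j` is replaced by SLURM with the job ID, so each submission writes its own log file.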